Artificial intelligence (AI) is increasingly used in financial services, but many systems operate as opaque "black boxes", making their decisions difficult to understand. This lack of transparency creates challenges for regulatory compliance and erodes customer trust. Explainable AI (XAI) addresses this by exposing the reasoning behind decisions such as loan approvals or fraud alerts, which helps institutions comply with regulations like the GDPR and the U.S. Equal Credit Opportunity Act.

Key points:

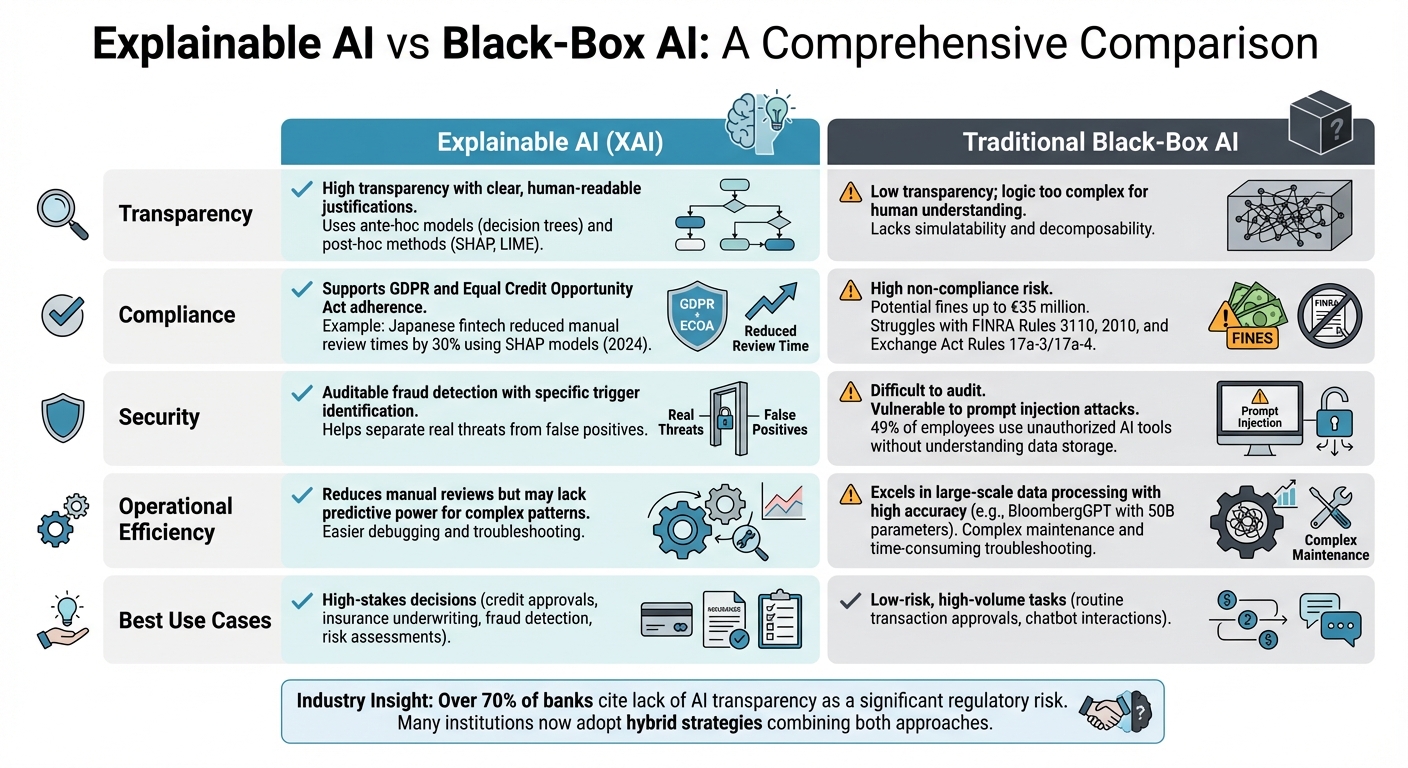

- XAI offers clarity by breaking down decision factors (e.g., debt-to-income ratios) and uses tools like SHAP and LIME for interpretability.

- Traditional black-box AI excels at handling complex data but lacks transparency, posing risks for compliance and security.

- Financial institutions face a trade-off between the accuracy of black-box models and the transparency of XAI.

Quick takeaway: XAI is essential for high-stakes decisions, like credit approvals and fraud detection, while black-box AI works better for low-risk, high-volume tasks. Many organizations now combine both approaches to balance performance with regulatory and operational needs.

1. Explainable AI (XAI)

Explainable AI (XAI) sheds light on how opaque algorithms make decisions. Instead of simply delivering a credit decision or fraud alert, XAI breaks down the contributing factors - like debt-to-income ratio, transaction timing, or credit history - that influenced the outcome. Tools such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) help quantify the role each input feature played in the final result.
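To make the idea of quantifying each feature's role concrete, here is a minimal sketch of the Shapley calculation that SHAP is built on. It does not use the `shap` library; it enumerates every feature ordering directly, which only works for a handful of features. The scoring function, its weights, and the baseline applicant are all invented for illustration.

```python
from itertools import permutations


def toy_credit_score(applicant):
    """Hypothetical scoring function; the weights are invented for illustration."""
    score = 600.0
    score -= 200.0 * applicant["debt_to_income"]
    score += 5.0 * applicant["credit_history_years"]
    # Interaction term: late payments hurt more when debt is already high.
    score -= 30.0 * applicant["late_payments"] * (1.0 + applicant["debt_to_income"])
    return score


def shapley_values(model, instance, baseline):
    """Exact Shapley values: average each feature's marginal contribution over
    every order in which features switch from the baseline to the actual value."""
    features = list(instance)
    totals = {name: 0.0 for name in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)
        previous = model(current)
        for name in order:
            current[name] = instance[name]
            value = model(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


# Assumed reference applicant and the applicant being explained.
baseline = {"debt_to_income": 0.30, "credit_history_years": 10, "late_payments": 0}
applicant = {"debt_to_income": 0.50, "credit_history_years": 4, "late_payments": 2}
contributions = shapley_values(toy_credit_score, applicant, baseline)
```

The contributions add up to the gap between the applicant's score and the baseline score, which is what makes this style of attribution convenient for reporting. Real models have too many features to enumerate, so libraries approximate the same quantity rather than computing it exactly.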

Transparency

XAI achieves clarity in two key ways. First, ante-hoc models - like decision trees - are designed to be inherently understandable. Second, post-hoc methods apply interpretability techniques to more complex systems after predictions are made. Counterfactual explanations add another layer of insight. For instance, they might show that lowering a debt-to-income ratio by 5% could lead to loan approval. This level of transparency is crucial for meeting strict regulatory requirements.
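A counterfactual explanation can be sketched as a small search: keep everything about the applicant fixed, lower one feature step by step, and report the first change that flips the decision. The approval rule, thresholds, and field names below are assumptions made up for this example, and the debt-to-income ratio is stored as whole percentage points to avoid floating-point rounding.

```python
def approve(applicant):
    """Hypothetical approval rule standing in for any model's decision."""
    return applicant["dti_pct"] <= 40 and applicant["credit_score"] >= 650


def dti_counterfactual(model, applicant):
    """Return the smallest reduction in debt-to-income (in percentage points)
    that turns a denial into an approval, or None if no reduction is enough."""
    if model(applicant):
        return 0
    for reduction in range(1, applicant["dti_pct"] + 1):
        candidate = dict(applicant, dti_pct=applicant["dti_pct"] - reduction)
        if model(candidate):
            return reduction
    return None


denied = {"dti_pct": 45, "credit_score": 700}
needed = dti_counterfactual(approve, denied)
if needed is not None:
    print(f"Lowering debt-to-income by {needed} points would lead to approval.")
```

When the function returns `None`, debt-to-income alone cannot change the outcome, which is itself useful information for the applicant.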

Compliance

Regulatory frameworks increasingly demand that automated decisions be explainable. The EU's GDPR restricts decisions based solely on automated processing, and the US Equal Credit Opportunity Act requires lenders to give applicants specific reasons for adverse actions. Rules like these are often described as a "right to explanation", which makes XAI not just helpful but, in many lending contexts, a practical legal necessity. For example, in 2024, a Japanese fintech firm used SHAP-based models to justify loan decisions to the Financial Services Agency (FSA). This approach cut manual review times by 30% while ensuring transparency and compliance. Clear, documented decision-making also helps protect organizations from lawsuits and regulatory penalties by making every automated decision auditable.
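One way to turn model output into the kind of documented justification described above is to map the most negative feature contributions to plain-language reasons. The sketch below assumes a simple linear scorecard; the weights, reference applicant, and reason wording are hypothetical and are not taken from any regulation or lender.

```python
# Hypothetical scorecard weights and reason wording, invented for illustration.
WEIGHTS = {"dti_pct": -2.0, "credit_history_years": 3.0, "late_payments": -15.0}
REASON_TEXT = {
    "dti_pct": "Debt-to-income ratio too high",
    "credit_history_years": "Credit history too short",
    "late_payments": "Recent late payments",
}


def adverse_reasons(applicant, reference, limit=2):
    """List the factors that pulled the score furthest below the reference."""
    contributions = {
        name: weight * (applicant[name] - reference[name])
        for name, weight in WEIGHTS.items()
    }
    # Sort ascending so the most damaging factors come first.
    negative = sorted(
        (value, name) for name, value in contributions.items() if value < 0
    )
    return [REASON_TEXT[name] for _, name in negative[:limit]]


reference = {"dti_pct": 30, "credit_history_years": 10, "late_payments": 0}
applicant = {"dti_pct": 48, "credit_history_years": 12, "late_payments": 1}
reasons = adverse_reasons(applicant, reference)
```

For a non-linear model, the per-feature contributions would come from an attribution method such as SHAP instead of the weight-times-difference shortcut used here.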

Security

XAI is a game-changer for fraud detection. It pinpoints specific triggers - like unusual transaction amounts, irregular timings, or suspicious locations - that signal potential fraud. This precision allows security teams to fine-tune anti–money laundering strategies and respond faster than traditional manual audits. By highlighting the factors that matter most, XAI helps analysts separate real threats from false positives, streamlining investigations and improving overall security.
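The triggers mentioned above can be illustrated with a deliberately simple rule-based check that returns the reasons behind an alert rather than a bare yes or no. The thresholds, field names, and trigger wording are assumptions chosen for the example, not values from any production system.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float
    hour: int      # local hour of day, 0-23
    country: str


def fraud_triggers(tx, typical_amount, home_country):
    """Return the human-readable triggers a transaction sets off (may be empty)."""
    triggers = []
    if tx.amount > 5 * typical_amount:       # assumed threshold
        triggers.append("amount far above the customer's typical spend")
    if tx.hour < 5:                          # assumed "unusual hours" window
        triggers.append("transaction at an unusual hour")
    if tx.country != home_country:
        triggers.append("transaction outside the customer's home country")
    return triggers


suspicious = Transaction(amount=2500.0, hour=3, country="BR")
for reason in fraud_triggers(suspicious, typical_amount=100.0, home_country="US"):
    print("Flagged:", reason)
```

An analyst who sees the list of triggers can dismiss a false positive quickly, for example when the customer is known to be travelling.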

Operational Efficiency

There’s often a concern that making AI systems more transparent could slow them down. However, hybrid approaches prove that speed and accountability can go hand in hand. High-performance models can handle rapid predictions, while interpretable models step in to provide clear explanations. This combination ensures institutions can maintain both efficiency and accountability.
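A hybrid setup of this kind might look like the sketch below: the opaque model scores every request, and an explanation is computed only when the decision is high-stakes. The explanation method here is a simple one-feature-at-a-time perturbation against a baseline, used as a lightweight stand-in rather than SHAP or LIME. The model formula, baseline, and 0.5 cutoff are invented for illustration.

```python
import math


def black_box_score(features):
    """Stand-in for an opaque model; the formula is invented for illustration."""
    z = 2.0 - 6.0 * features["debt_to_income"] + 0.1 * features["credit_history_years"]
    return 1.0 / (1.0 + math.exp(-z))


def perturbation_explanation(features, baseline):
    """How much the score changes when each feature is reset to its baseline value."""
    full = black_box_score(features)
    return {
        name: full - black_box_score({**features, name: baseline[name]})
        for name in features
    }


def decide(features, baseline, high_stakes):
    """Always score quickly; attach an explanation only for high-stakes decisions."""
    score = black_box_score(features)
    decision = {"approved": score >= 0.5, "score": score}
    if high_stakes:
        decision["explanation"] = perturbation_explanation(features, baseline)
    return decision


baseline = {"debt_to_income": 0.30, "credit_history_years": 10}
applicant = {"debt_to_income": 0.60, "credit_history_years": 4}
loan_decision = decide(applicant, baseline, high_stakes=True)
routine_decision = decide(applicant, baseline, high_stakes=False)
```

The extra model calls happen only on the high-stakes path, so routine traffic keeps its original latency.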

2. Traditional Black-Box AI

Traditional black-box AI systems, like deep learning models and neural networks, function as closed systems. They produce predictions and decisions without revealing the reasoning behind them. As Cheryll-Ann Wilson, PhD, CFA, puts it:

"Systems based on deep learning algorithms in particular can become so complex that even their developers cannot fully explain how these systems generate decisions".

This lack of transparency introduces challenges in areas like regulatory compliance, security, and maintaining these models efficiently.

Transparency

Black-box models, unlike explainable AI (XAI), do not shed light on how they make decisions. They lack simulatability (a person's ability to step through the model's reasoning) and decomposability (the ability to break the model's logic into understandable parts). For instance, if a neural network denies a loan or flags a transaction as suspicious, it doesn’t clarify which data points influenced that decision. This opacity complicates debugging - developers can’t easily pinpoint which features need adjustment or address issues like overfitting. Over time, this can lead to declining model performance.

Compliance

In the U.S., financial regulations emphasize accountability, something black-box systems often fail to deliver. For example, FINRA Rule 3110 requires firms to establish and maintain a system for supervising their business activities, which is difficult when no one can explain how a model reaches its decisions. Black-box systems also risk breaching fair lending laws and the ethical standards of FINRA Rule 2010 because hidden biases in training data - like using zip codes that indirectly reflect protected attributes - can go undetected without transparency tools.
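A basic check for the kind of hidden bias described above is to compare approval rates across groups. The sketch below computes each group's approval rate relative to the best-treated group and flags any group below a threshold. The 0.8 cutoff is borrowed from the "four-fifths" rule of thumb and is an assumption here, not a standard cited in this article; the group names and decisions are made up.

```python
def approval_rates(decisions_by_group):
    """Share of approved applications (1 = approved, 0 = denied) per group."""
    return {
        group: sum(decisions) / len(decisions)
        for group, decisions in decisions_by_group.items()
    }


def impact_ratios(decisions_by_group):
    """Each group's approval rate divided by the highest group's approval rate."""
    rates = approval_rates(decisions_by_group)
    best = max(rates.values())
    if best == 0:
        # Nobody was approved, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flagged_groups(decisions_by_group, threshold=0.8):
    """Groups whose relative approval rate falls below the assumed threshold."""
    ratios = impact_ratios(decisions_by_group)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical outcomes grouped by zip-code cluster.
outcomes = {
    "zip_cluster_a": [1, 1, 1, 1, 1, 1, 1, 1, 0, 0],  # 8 of 10 approved
    "zip_cluster_b": [1, 1, 1, 1, 1, 0, 0, 0, 0, 0],  # 5 of 10 approved
}
needs_review = flagged_groups(outcomes)
```

A flagged group is a prompt for further investigation, not proof of discrimination; the next step would be to examine which features drive the gap.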

Additionally, Exchange Act Rules 17a-3 and 17a-4 mandate accurate recordkeeping. The dynamic nature of self-learning models complicates this requirement, as their outputs can evolve in ways that are hard to trace. Without clear explanations, regulatory compliance becomes a significant challenge, making it essential to find a balance between performance and accountability.
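One way to keep evolving model outputs traceable is to store, for every decision, the model version, the inputs, the output, and the explanation, together with a checksum that reveals later edits. The sketch below is an illustration of that idea only; the field names are assumptions, and it is not a statement of what Rules 17a-3 and 17a-4 require.

```python
import hashlib
import json
from datetime import datetime, timezone

AUDITED_FIELDS = ("model_version", "inputs", "output", "explanation", "timestamp")


def _checksum(record):
    """SHA-256 over a canonical JSON rendering of the audited fields."""
    canonical = json.dumps({key: record[key] for key in AUDITED_FIELDS}, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def make_audit_record(model_version, inputs, output, explanation):
    """Bundle one automated decision into a record with a checksum."""
    record = {
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "explanation": explanation,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    record["checksum"] = _checksum(record)
    return record


def is_intact(record):
    """True if the audited fields still match the stored checksum."""
    return record.get("checksum") == _checksum(record)


record = make_audit_record(
    model_version="credit-v1.3",  # hypothetical version label
    inputs={"dti_pct": 45, "credit_score": 700},
    output="denied",
    explanation=["Debt-to-income ratio too high"],
)
```

Recording the model version alongside each decision is what lets a reviewer reproduce an outcome after the model has been retrained. A checksum stored next to the record only detects accidental or careless changes, so a real system would also need write-once storage or an external log.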

Security

The opaque design of black-box AI exposes it to various security risks. Shadow AI is a growing concern - 49% of employees use unauthorized AI tools, and more than half don’t understand how their data is stored or processed. Black-box models, especially large language models, are particularly vulnerable to prompt injection attacks. In these attacks, hidden instructions in emails or documents can manipulate the AI into revealing sensitive information. This lack of transparency also makes it harder to spot misleading outputs, which could result in financial losses.

Operational Efficiency

Despite their impressive predictive capabilities, black-box models - like BloombergGPT, a 50-billion-parameter financial transformer - come with significant operational challenges. Their complexity makes troubleshooting and maintenance far more time-consuming. When unexpected results arise, developers are left to investigate without clear guidance on which data or features caused the issue. This inefficiency often forces organizations to run these models alongside existing systems, increasing resource demands.

Pros and Cons

Explainable AI vs Black-Box AI in Financial Services Comparison

When it comes to choosing between Explainable AI (XAI) and traditional black-box AI, the decision often boils down to balancing transparency, compliance, and performance. Each approach has its own strengths and limitations, making it crucial to align the choice with regulatory needs and operational goals.

Explainable AI (XAI) shines in situations where transparency is non-negotiable. It provides clear, human-understandable justifications for its outputs, which is a big win for meeting legal and regulatory standards. This level of clarity also fosters trust among stakeholders and simplifies debugging, as developers can easily identify underperforming parts of the model. However, this transparency often comes with a trade-off: XAI models may lack the sophistication needed to capture the intricate patterns that more complex deep learning models excel at.

Traditional Black-Box AI, on the other hand, is a powerhouse for handling complex datasets. Its computational strength enables it to deliver high predictive accuracy, making it ideal for tasks that demand performance over interpretability. But this complexity can pose a serious challenge in regulated industries. For instance, over 70% of banks cite the lack of transparency in AI systems as a significant regulatory risk.

The decision between these approaches often depends on an organization’s appetite for risk and its regulatory obligations. As Chris Gufford from nCino aptly puts it:

"Explainability in AI is similar to the transparency required in traditional banking models - both center on clear communication of inputs and outputs".

Interestingly, many financial institutions are now adopting hybrid strategies. They use black-box models for high-volume, low-stakes tasks while relying on interpretable models for critical decisions like credit approvals and risk assessments.

| Feature | Explainable AI (XAI) | Traditional Black-Box AI |
|---|---|---|
| Transparency | High; provides clear, human-readable justifications | Low; logic is too complex for human understanding |
| Compliance | Supports adherence to GDPR and fair lending laws | High risk of non-compliance; fines up to €35 million |
| Security | Auditable but may expose sensitive data | Difficult to audit; hidden vulnerabilities possible |
| Operational Efficiency | Reduces manual reviews; may lack predictive power | Excels in large-scale data processing with high accuracy |
| Debugging | Easier to troubleshoot and adjust | Harder to identify and fix issues like model drift |

Conclusion

Deciding between explainable AI (XAI) and black-box AI comes down to weighing risks against regulatory expectations. Kelly Bailey from the Corporate Finance Institute points out:

"If you can't articulate how your AI makes decisions, a financial regulator may question its validity during an audit".

This underscores the importance of transparency in shaping AI strategies for financial institutions.

XAI plays a crucial role in areas where decisions carry high stakes, such as credit approvals, insurance underwriting, fraud detection, and risk assessments. These tasks demand transparency to meet regulatory requirements. The dangers of relying on opaque AI models are clear - when outcomes can't be explained, institutions face significant compliance hurdles with both regulators and customers.

For lower-risk, high-volume tasks like routine transaction approvals or chatbot interactions, black-box models can still be effective. Many organizations find success by adopting hybrid strategies, combining the speed and accuracy of black-box models with tools like SHAP or LIME to provide explanations when needed. The key is tailoring explainability to the purpose of the application, not just the complexity of the model. As Jochen Papenbrock from NVIDIA emphasizes:

"The requirements for explainability and fairness should, as a leading principle, depend on the application purpose of a model rather than on the choice of its model design".

FAQs

How does Explainable AI enhance compliance in financial services?

Explainable AI is transforming compliance in financial services by making AI-driven decisions more transparent and easier to understand. Through methods like SHAP values (which explain the impact of each feature on a model's predictions) and rule extraction (breaking down complex models into human-readable rules), financial institutions can clearly showcase how decisions are made in areas like AML (Anti-Money Laundering), KYC (Know Your Customer), and risk assessments.

This level of clarity serves multiple purposes. It helps identify and reduce potential biases, ensures compliance with regulations, and provides detailed, auditable records for regulatory reviews. By adopting Explainable AI, organizations not only meet strict compliance standards but also reinforce trust and accountability in their operations.

How is Explainable AI different from traditional black-box AI?

Explainable AI takes a different approach from the traditional black-box AI by offering clear and understandable insights into how decisions are reached. It relies on transparent models or explanation techniques to shed light on the reasoning behind predictions. This openness not only helps build trust but also supports compliance with regulations and improves decision-making processes.

On the other hand, black-box AI produces results without offering any glimpse into its internal workings, making it challenging to audit or validate. Explainable AI proves particularly useful in fields like finance, where accountability, security, and adherence to regulations are absolutely essential.

How does combining Explainable AI with black-box AI benefit financial institutions?

Financial institutions can gain the best of both worlds by combining the precision and speed of black-box AI models with the clarity and accountability offered by Explainable AI (XAI). While black-box models are excellent at delivering accurate predictions, their lack of transparency can create hurdles, especially in highly regulated sectors like finance.

Incorporating XAI techniques helps institutions shed light on how decisions are made. This not only ensures compliance with regulatory requirements but also builds trust with stakeholders by making processes more transparent. This balanced approach allows organizations to embrace cutting-edge technology while maintaining responsibility, making it particularly effective for navigating complex financial data and making high-impact decisions.