AI pricing models can boost efficiency and personalization but often risk unethical outcomes. Addressing bias, transparency, and data privacy is crucial to avoid regulatory fines and maintain customer trust. Here's how to build ethical AI pricing systems:

- Eliminate Bias: Audit datasets to remove discriminatory patterns and avoid "proxy discrimination" (e.g., zip codes reflecting race). Use diverse data and fairness-aware techniques to ensure equitable outcomes.

- Increase Transparency: Avoid "black box" models. Opt for interpretable systems like decision trees or use tools to clarify opaque algorithms. Explain pricing logic clearly to customers, offering "what-if" tools or contrastive examples.

- Ensure Data Privacy: Comply with laws like GDPR and CCPA by handling sensitive data responsibly. Document decisions and set up real-time monitoring for risks like model drift or misuse.

- Audit and Monitor: Conduct regular internal and external reviews, track fairness metrics, and maintain audit trails to stay compliant with evolving regulations.

- Fair Pricing Strategies: Use tiered or outcome-based pricing to align costs with value delivered. Offer safeguards like usage alerts or caps to prevent unexpected charges.

Key takeaway: Ethical AI pricing isn't just about compliance - it builds trust, strengthens customer relationships, and protects against legal risks. Startups that prioritize fairness and clarity in pricing can gain a competitive edge while ensuring long-term success.


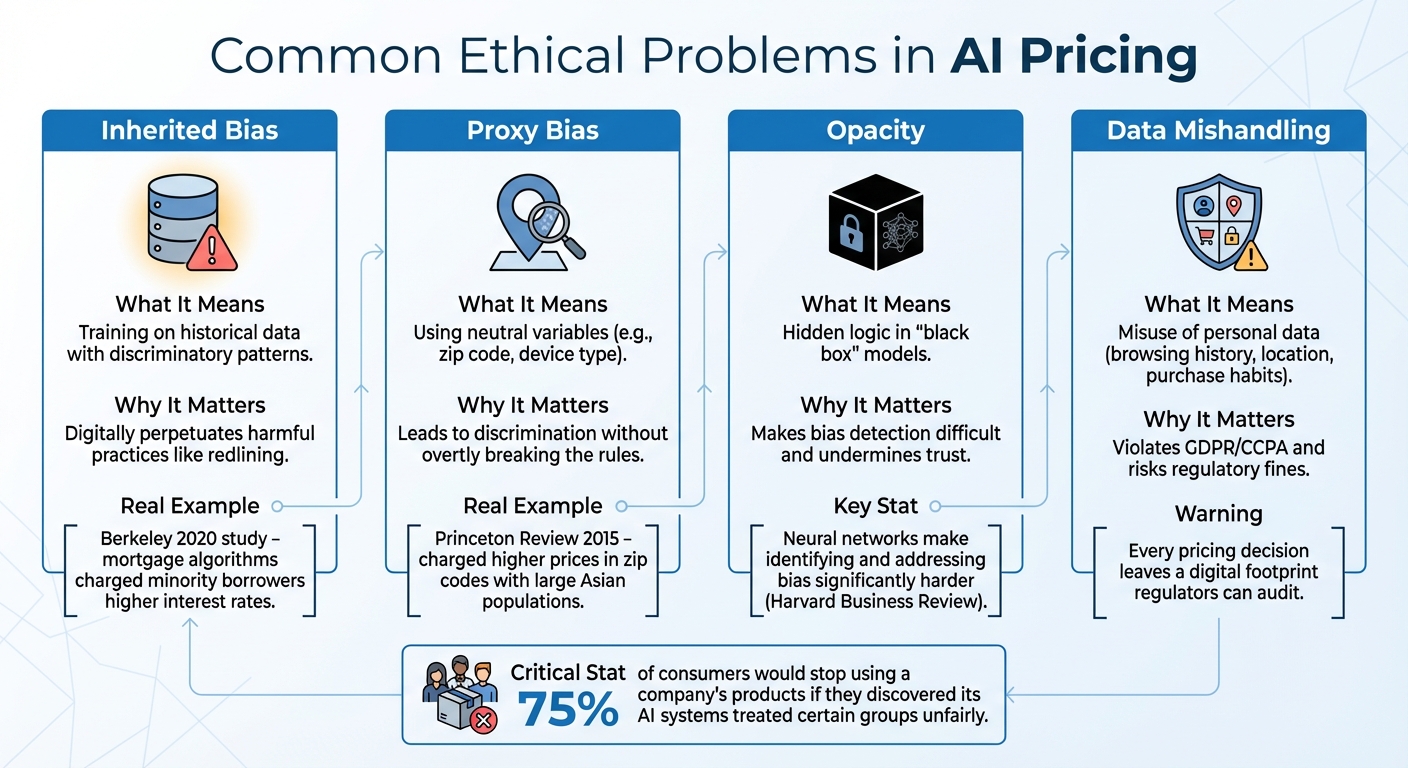


Common Ethical Problems in AI Pricing Systems

Startups using AI-driven pricing systems often stumble into three major ethical challenges that can harm trust and invite legal trouble. Spotting these risks early allows you to build safeguards before issues spiral out of control.

Price Discrimination Risks

AI models often inherit biases from historical data, which they can then amplify on a massive scale. For instance, a 2020 study conducted by Berkeley researchers revealed that mortgage algorithms charged minority borrowers higher interest rates. These algorithms didn’t explicitly use race as a factor but relied on digital surrogates - like zip codes and browsing habits - that closely correlated with protected characteristics. This type of "proxy discrimination" is particularly tricky because it appears neutral on the surface but leads to unfair outcomes.

Another striking case occurred in 2015 when the Princeton Review's online tutoring service charged higher prices in zip codes with large Asian populations. The company claimed the algorithm was merely optimizing for market conditions, yet the public backlash was swift and severe. This example underscores how even well-meaning pricing systems can unintentionally produce discriminatory results when algorithms detect patterns tied to sensitive traits like race or ethnicity.

Beyond the issue of bias, the lack of transparency in AI pricing systems adds another layer of complexity.

Transparency Gaps

Deep learning models often function as a "black box", making it nearly impossible to understand how decisions are made. According to the Harvard Business Review, the complexity of these neural networks makes identifying and addressing bias significantly harder.

This lack of transparency creates a troubling information imbalance. Companies have access to vast troves of behavioral data - such as browsing history, device preferences, and time-of-day activity - while customers are left in the dark about how these factors influence the prices they’re offered. This imbalance not only erodes trust but also enables what some call "supercharged" price discrimination, where AI predicts a customer’s willingness to pay with incredible accuracy.

Opacity in decision-making also raises concerns about compliance with data privacy laws.

Data Privacy Concerns

AI pricing systems often rely on extensive personal data, which can lead to breaches of privacy regulations like the GDPR in Europe or the CCPA in California. For example, when an algorithm uses browsing history, location data, or purchase habits to determine prices, it’s processing sensitive information that must be handled carefully and disclosed transparently.

The stakes are even higher when pricing models make real-time adjustments based on dynamic data inputs. Every pricing decision leaves a digital footprint that regulators can audit, and improper handling of this data could result in significant legal and financial penalties.

| Risk Category | What It Means | Why It Matters |
|---|---|---|
| Inherited Bias | Training on historical data with discriminatory patterns | Digitally perpetuates harmful practices like redlining |
| Proxy Bias | Using neutral variables (e.g., zip code, device type) | Leads to discrimination without overtly breaking the rules |
| Opacity | Hidden logic in "black box" models | Makes bias detection difficult and undermines trust |
| Data Mishandling | Misuse of personal data | Violates GDPR/CCPA and risks regulatory fines |

How to Build Transparent and Explainable AI Systems

Transparent and explainable AI systems are key to maintaining ethical pricing practices by clarifying decision-making processes and earning customer trust. The challenge? Many high-performing AI models function as "black boxes", making it tough to explain why a specific price was assigned to a customer. The solution lies in building systems that offer clear, accessible logic for both your team and your customers.

Designing for Explainability

For high-stakes pricing decisions, opt for interpretable models like linear regression or decision trees. These models allow you to track exactly how inputs influence outputs, which is crucial when customers request clarity on how their personalized price was determined.
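To make the idea of tracking how inputs influence outputs concrete, here is a minimal sketch of a linear pricing model that returns a per-feature dollar breakdown alongside the price. The feature names, coefficients, and base price are hypothetical values chosen for illustration, not real pricing data.

```python
# Hypothetical coefficients for an illustrative linear pricing model.
# All names and values are assumptions made for this sketch.
BASE_PRICE = 20.0
COEFFICIENTS = {
    "seats": 4.0,                 # dollars per seat
    "monthly_api_calls_k": 0.5,   # dollars per 1,000 API calls
    "support_tier": 10.0,         # dollars per support tier level
}


def explain_price(features: dict) -> tuple:
    """Return the price and each feature's dollar contribution to it."""
    contributions = {
        name: COEFFICIENTS[name] * features[name] for name in COEFFICIENTS
    }
    price = BASE_PRICE + sum(contributions.values())
    return price, contributions


price, parts = explain_price(
    {"seats": 5, "monthly_api_calls_k": 20, "support_tier": 1}
)
print(f"Price: ${price:.2f} (base ${BASE_PRICE:.2f})")
# List the largest contributions first so the main driver is obvious.
for name, amount in sorted(parts.items(), key=lambda kv: -kv[1]):
    print(f"  {name}: +${amount:.2f}")
```

Because the model is additive, the breakdown is exact rather than an approximation, which is the main practical advantage of an interpretable model when a customer asks how a price was reached.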

Additionally, provide both local explanations (specific to individual pricing decisions) and global insights (an overview of pricing logic). The pricing structure itself can also be made easier to explain. For instance, in 2024, Intercom introduced an outcome-based pricing model for its AI support agent, "Fin." Instead of charging based on usage or API calls, it charged a fixed fee only when Fin successfully resolved a support ticket. This approach aligned the cost with the delivered value, building customer trust even though the company faced variable computational costs.

If you rely on "black box" models for performance, use post-hoc interpretability tools to reverse-engineer the decision-making process for stakeholders. For example, Progressive Insurance provides customers with explanations of the factors that influenced their personal insurance quotes, even though the underlying algorithm remains proprietary. This transparency gives customers actionable insights while protecting the company’s competitive edge.

When incorporating unconventional data like geolocation or browsing patterns, document why each attribute is used. Such documentation is vital during audits and helps your team identify potential issues, like proxy discrimination, before they affect customers.

A clear, interpretable design also simplifies ongoing audit processes.

Setting Up Audit Procedures

Transparent design is just the first step - ongoing audits ensure your system remains ethical over time. Conduct audits at three stages: before development, during development, and after implementation. This continuous cycle helps catch issues early and ensures your system adapts ethically as market conditions evolve.

Independent third-party audits are especially valuable. External reviewers can spot biases that internal teams might miss. Dedicated internal oversight helps too: Salesforce, for example, has its Office of Ethical and Humane Use of Technology oversee AI applications on its Einstein AI platform. The company provides detailed documentation on how pricing recommendations are generated and conducts regular bias audits using standardized fairness metrics. This transparency has increased customer confidence and adoption of its AI tools.

Maintain version control for your system and document every update, including the changes made, the reasoning behind them, and their potential impact. This audit trail becomes essential when regulators or customers question a pricing decision months later. You can also use "challenger models" - opaque systems that benchmark your transparent production model - to test performance without compromising clarity.
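One possible way to keep such an audit trail tamper-evident is to chain each change record to the previous one with a hash. The sketch below is an illustration only: the record fields (`version`, `change`, `reason`, `impact`) mirror the items listed above, while the function names and sample entries are hypothetical. A real log would also carry timestamps and author identities, omitted here to keep the example deterministic.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash that precedes the first entry


def _digest(body: dict) -> str:
    """Hash a record body in a stable, key-sorted JSON form."""
    return hashlib.sha256(
        json.dumps(body, sort_keys=True).encode("utf-8")
    ).hexdigest()


def append_entry(log: list, version: str, change: str, reason: str, impact: str) -> dict:
    """Append a change record that is linked to the previous record's hash."""
    body = {
        "version": version,
        "change": change,
        "reason": reason,
        "impact": impact,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Check that no record was edited or removed after it was written."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "1.0.0", "Initial linear model", "Launch", "All customers")
append_entry(audit_log, "1.1.0", "Removed zip code feature", "Proxy bias risk", "Regional quotes")
print(verify(audit_log))
```

Editing any earlier record changes its hash and breaks the chain, so a later reviewer can tell whether the history they are reading is the history that was written.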

Finally, set up human oversight guardrails to monitor pricing decisions. Define clear limits for price differentials and create escalation paths for unusual pricing patterns. Also, establish conditions under which the pricing algorithm should be suspended if it fails to meet ethical or interpretability standards. These safeguards protect your company from unintended discriminatory outcomes.
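The guardrails described above can be sketched as a small review function that approves, escalates, or suspends based on how far a quote drifts from the list price. The two thresholds are hypothetical placeholders; real limits would come from your own pricing policy.

```python
from enum import Enum


class Action(Enum):
    APPROVE = "approve"
    ESCALATE = "escalate"  # route the quote to a human reviewer
    SUSPEND = "suspend"    # pause automated pricing until reviewed


# Hypothetical policy thresholds, expressed as a fraction of the list price.
ESCALATE_ABOVE = 0.15
SUSPEND_ABOVE = 0.40


def review_quote(list_price: float, quoted_price: float) -> Action:
    """Decide what to do with an algorithmic quote based on its deviation."""
    if list_price <= 0:
        raise ValueError("list_price must be positive")
    deviation = abs(quoted_price - list_price) / list_price
    if deviation > SUSPEND_ABOVE:
        return Action.SUSPEND
    if deviation > ESCALATE_ABOVE:
        return Action.ESCALATE
    return Action.APPROVE


for quote in (110.0, 125.0, 150.0):
    print(quote, review_quote(100.0, quote).value)
```

Keeping the decision in one small, testable function makes it easier to show an auditor exactly when a human is brought into the loop.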

Communicating Pricing Logic

Clear communication is vital - avoid overwhelming customers with technical jargon. Instead, provide concise, actionable explanations. Customers should not only understand why they received a specific price but also learn how they can influence future pricing.

Use contrastive explanations to address the questions customers actually ask. For example, instead of detailing how your entire system works, answer questions like, "Why is my price $50 instead of $40?". This targeted approach feels more relevant and less arbitrary to customers.
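As an illustration of a contrastive explanation, the sketch below compares a customer's profile with a reference profile under an additive pricing model and reports only the features that account for the difference. The weights, base price, and profiles are hypothetical and chosen so the two prices match the $50 versus $40 example.

```python
# Hypothetical per-unit weights of an additive pricing model (assumed values).
WEIGHTS = {"seats": 5.0, "storage_gb": 0.25, "priority_support": 10.0}
BASE = 10.0


def price(profile: dict) -> float:
    """Price a profile as the base fee plus weighted feature values."""
    return BASE + sum(WEIGHTS[k] * profile[k] for k in WEIGHTS)


def contrast(actual: dict, reference: dict) -> dict:
    """Return the dollar difference each feature adds relative to the reference."""
    deltas = {k: WEIGHTS[k] * (actual[k] - reference[k]) for k in WEIGHTS}
    # Keep only the features that actually differ between the two profiles.
    return {k: d for k, d in deltas.items() if d != 0}


mine = {"seats": 6, "storage_gb": 40, "priority_support": 0}
reference = {"seats": 4, "storage_gb": 40, "priority_support": 0}

print(f"Your price: ${price(mine):.2f}, reference price: ${price(reference):.2f}")
for feature, dollars in contrast(mine, reference).items():
    print(f"  {feature} accounts for ${dollars:+.2f}")
```

The customer sees a single line (two extra seats account for the $10 gap) instead of a description of the whole model.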

Interactive tools, like "what-if" scenarios, can further enhance understanding. These tools allow customers to explore how different inputs affect their pricing, turning explanations into hands-on learning experiences. Pair them with plain-language definitions: rather than leaving technical terms like "tokens" or "compute hours" unexplained, translate them into business value through clear definitions and interactive price calculators.

Proactively notify customers about potential price changes before they happen to avoid "bill shock." Timely updates explaining pricing shifts build trust and give customers time to adjust. Treat these updates like software releases - include notes, reasoning, and rollback plans to maintain stakeholder confidence.

Lastly, ensure there’s a human point of contact available to handle customer queries or disputes regarding AI-driven pricing. While automated explanations are helpful, customers feel reassured knowing they can speak to a real person if the algorithm’s logic doesn’t make sense.

| Explanation Type | What It Addresses | Why Customers Care |
|---|---|---|
| Rationale | Technical logic and input features | Understanding the "why" behind a specific price |
| Responsibility | Accountability and oversight | Knowing who to contact to contest a decision |
| Fairness | Bias mitigation and safety | Ensuring the price is non-discriminatory |
| Impact | Individual and societal wellbeing | Trusting that the AI use does not cause harm |

How to Ensure Fair Pricing and Eliminate Bias

Ensuring fair pricing goes beyond transparency - it requires careful oversight of both data and models. To achieve this, you need to take deliberate steps to address potential sources of bias in your datasets, set up safeguards, and maintain ongoing review processes. By focusing on these areas, you can keep pricing equitable while staying ahead of potential issues.

Using Diverse Datasets

Bias often starts with the data you use. To minimize this, audit your training data to identify inaccuracies or imbalances that might misrepresent your customer base. Bias isn’t always tied to obvious factors like race or gender; subtle details like zip codes, device types, or browsing habits can act as proxies for sensitive characteristics.

To address this, ensure your data represents all relevant groups fairly. Augment datasets to include underrepresented populations, remove sensitive or irrelevant features, and validate your models against trusted benchmarks or "golden datasets" to catch potential fairness issues early.
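A simple way to screen for proxy features during a data audit is to ask how much better you can guess a protected attribute once you know the candidate feature. The sketch below is one rough heuristic, not a complete fairness test. It assumes you hold protected-attribute labels for audit purposes only, and the sample zip codes and group labels are made up.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values: list, protected_values: list) -> tuple:
    """Return (baseline accuracy, accuracy when the feature is known).

    Baseline: always guess the most common protected group.
    With feature: guess the most common group within each feature value.
    A large gap suggests the feature may act as a proxy.
    """
    n = len(protected_values)
    baseline = Counter(protected_values).most_common(1)[0][1] / n

    by_value = defaultdict(Counter)
    for feature, group in zip(feature_values, protected_values):
        by_value[feature][group] += 1
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    return baseline, correct / n


# Made-up audit sample: zip code alongside a protected group label.
zip_codes = ["10001", "10001", "10001", "20002", "20002", "20002", "30003", "30003"]
groups = ["A", "A", "A", "B", "B", "B", "A", "B"]

baseline, with_feature = proxy_strength(zip_codes, groups)
print(f"baseline={baseline:.3f}, with zip code={with_feature:.3f}")
```

In this toy sample, knowing the zip code raises guessing accuracy from 0.5 to 0.875, which would be a strong signal to review or drop the feature.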

"Diverse teams are an essential safeguard against building biased systems." - Microsoft

It’s also vital to involve a variety of perspectives during development. Homogeneous teams might miss biases that don’t affect them directly, so diversity in your development team can act as an additional layer of protection.

Implementing Guardrails

Guardrails are essential for preventing your algorithm from creating unfair pricing disparities. For instance, you can set limits on how much prices can vary across customer segments to avoid extreme outliers. Additionally, fairness-aware optimization techniques like MinDiff and Counterfactual Logit Pairing (CLP) can help balance error rates across different data groups. These methods ensure that changes in sensitive attributes don’t lead to biased predictions.

Pair these algorithmic measures with human oversight to quickly address any anomalies or unexpected outcomes.
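The first guardrail, a limit on how far prices can vary across customer segments, can be checked with a few lines of code. This sketch computes the mean quote per segment and flags the model when the highest mean exceeds the lowest by more than a set ratio. It is a plain disparity check, not an implementation of MinDiff or CLP, and the 1.10 limit and sample quotes are hypothetical.

```python
from statistics import mean

MAX_RATIO = 1.10  # hypothetical policy: segment means may differ by at most 10%


def disparity(prices_by_segment: dict) -> tuple:
    """Return per-segment mean prices and the highest-to-lowest ratio."""
    means = {segment: mean(prices) for segment, prices in prices_by_segment.items()}
    ratio = max(means.values()) / min(means.values())
    return means, ratio


# Made-up quotes for two customer segments.
quotes = {
    "segment_a": [40.0, 42.0, 44.0],
    "segment_b": [48.0, 50.0, 52.0],
}

means, ratio = disparity(quotes)
flagged = ratio > MAX_RATIO
print(means, f"ratio={ratio:.3f}", "FLAG for review" if flagged else "ok")
```

A flag here is a prompt for human review rather than proof of discrimination, since segments can differ for legitimate reasons such as usage volume.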

Regular Review Cycles

Regular reviews are key to maintaining fairness over time. Conduct in-depth audits of your pricing structure annually, and supplement these with quarterly reviews to catch emerging issues. In fast-paced industries, monthly evaluations of core pricing metrics can help you stay ahead of market changes.

Automated monitoring is another crucial tool. Use 24/7 systems to detect model drift as input data evolves. This is especially important under regulations like the EU AI Act, which imposes steep penalties: up to 7% of global annual turnover for prohibited AI practices, and up to 3% for failing to meet the obligations that apply to high-risk AI systems, including risk management and documentation. Independent third-party audits can provide fresh perspectives and uncover biases that internal teams might overlook. Track key metrics such as reductions in bias scores, audit outcomes, and security incidents to measure the effectiveness of your safeguards.

| Audit Type | Frequency | Primary Focus |
|---|---|---|
| Deep Dive | Annual | Overall structure, tiers, and market positioning |
| Metric Check | Quarterly/Monthly | Conversion rates, discounting trends, and churn |
| Real-Time Monitoring | 24/7 | Model drift, adversarial attacks, and bias deviations |
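For the real-time monitoring row, one widely used drift signal is the Population Stability Index (PSI), which compares the distribution of an input at training time with its distribution in live traffic. The sketch below is a minimal stdlib version. The bin edges and sample values are made up, and the 0.25 alert level is a commonly quoted rule of thumb rather than a standard.

```python
import math

PSI_ALERT = 0.25  # rule-of-thumb level often treated as significant drift


def psi(expected: list, actual: list, edges: list) -> float:
    """Population Stability Index of `actual` against `expected`.

    `edges` are ascending bin boundaries shared by both samples.
    """
    def shares(sample: list) -> list:
        counts = [0] * (len(edges) + 1)
        for x in sample:
            # The bin index is the number of edges at or below the value.
            counts[sum(x >= e for e in edges)] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Made-up values of one model input, e.g. monthly usage per customer.
training = [10, 20, 30, 40, 50, 60, 70, 80]
live = [60, 70, 80, 90, 65, 75, 85, 95]
bin_edges = [25, 50, 75]

score = psi(training, live, bin_edges)
print(f"PSI={score:.2f}", "drift alert" if score > PSI_ALERT else "stable")
```

Running a check like this per input feature on a schedule gives an early warning that the model is pricing a population it was not trained on.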


Designing Accessible and Responsible Pricing Strategies

Creating ethical AI pricing requires finding the right balance between making your solutions accessible and maintaining profitability. By 2025, while 88% of businesses are expected to use AI in some capacity, only 58% will have figured out how to monetize their innovations effectively. To bridge this gap, pricing strategies should scale with the value customers receive while safeguarding them from unexpected costs. These strategies build on the ethical principles outlined earlier.

Let’s dive into pricing models that promote accessibility without jeopardizing financial stability.

Tiered Pricing Models

Tiered pricing provides customers with options tailored to their budgets by combining flat subscription fees with usage-based billing.

Take Browserbase as an example: they introduced tiered subscriptions with pre-packaged usage levels, catering to a range of users - from individual developers to large enterprises - while clearly communicating overage rates.

For ethical pricing, consider placing essential features that encourage adoption in the lower-cost tiers, while reserving advanced capabilities for premium plans. Tie pricing metrics, such as tokens or API calls, to actual costs. Additionally, implement safeguards like soft caps, usage alerts, or prepaid credits to help customers avoid unexpected bills and to manage infrastructure risks effectively.
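To show how a flat fee, bundled usage, overage rates, and usage alerts fit together, here is a small billing sketch. The tier, its prices, and the 80% alert level are hypothetical, not figures from any vendor mentioned above.

```python
from dataclasses import dataclass

ALERT_AT = 0.8  # hypothetical: warn once 80% of the bundled usage is consumed


@dataclass(frozen=True)
class Tier:
    name: str
    monthly_fee: float    # flat subscription fee in dollars
    included_units: int   # usage bundled into the fee
    overage_rate: float   # dollars per unit beyond the bundle


def bill(tier: Tier, units_used: int) -> dict:
    """Compute the monthly total and whether a usage alert should fire."""
    overage_units = max(0, units_used - tier.included_units)
    total = tier.monthly_fee + overage_units * tier.overage_rate
    return {
        "total": round(total, 2),
        "overage_units": overage_units,
        "alert": units_used >= ALERT_AT * tier.included_units,
    }


starter = Tier("starter", monthly_fee=20.0, included_units=1000, overage_rate=0.05)

for used in (500, 900, 1200):
    print(used, bill(starter, used))
```

The alert fires before any overage is charged, which gives the customer time to upgrade or throttle usage instead of finding out on the invoice.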

Outcome-Based Pricing

Outcome-based pricing charges customers based on verified results, such as resolving a support ticket, ensuring that prices align with the value delivered.

In 2024, Intercom adopted this approach for its AI-powered support agent, "Fin." Instead of charging per message or user seat, Intercom set a fixed fee for each customer support ticket successfully resolved by Fin, directly tying the cost to the value provided.

"When customers believe the price matches the value, the pricing mechanism can matter less than the outcomes." – Stripe Billing

Before rolling out outcome-based pricing, it’s crucial to calculate the true unit cost for each AI request - including factors like inference, tokens, and storage. This ensures that your margins remain healthy despite potential cost variability. Testing the model with new customers or conducting small A/B tests can help gauge its impact on customer retention before scaling it up.
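The unit-cost calculation can be sketched as follows. The key point is that failed attempts still consume compute, so the cost per successful outcome is the cost per attempt divided by the resolution rate. All figures are hypothetical.

```python
def cost_per_resolution(
    cost_per_request: float, requests_per_ticket: float, resolution_rate: float
) -> float:
    """Expected cost of one *successful* resolution.

    cost_per_request should already include inference, tokens, and storage.
    """
    if not 0 < resolution_rate <= 1:
        raise ValueError("resolution_rate must be in (0, 1]")
    cost_per_attempt = cost_per_request * requests_per_ticket
    return cost_per_attempt / resolution_rate


def gross_margin(price: float, cost: float) -> float:
    """Gross margin as a fraction of the price charged."""
    return (price - cost) / price


# Hypothetical figures: $0.02 per request, 5 requests per ticket,
# half of all tickets resolved, and $1.00 charged per resolution.
unit_cost = cost_per_resolution(0.02, 5, 0.5)
margin = gross_margin(1.00, unit_cost)
print(f"cost per resolution=${unit_cost:.2f}, gross margin={margin:.0%}")
```

Re-running the calculation with a lower resolution rate shows how quickly margins shrink when the AI resolves fewer tickets, which is the variability the paragraph above warns about.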

Balancing Revenue and Ethics

Ethical pricing strategies should not only drive revenue but also ensure fairness. For instance, setting limits on price differentials helps prevent algorithms from creating disparities that could be perceived as discriminatory.

AI infrastructure costs, particularly for GPU and compute resources, can change quickly. To stay aligned with market conditions, consider reviewing your pricing quarterly instead of annually. Notably, 92% of companies that charge for AI services have adjusted their pricing at least once to account for shifting costs and market trends. Involving cross-functional teams from Product, Finance, and Ethics ensures that your pricing model avoids encouraging problematic behaviors, like incentivizing unnecessary usage.

When implemented thoughtfully, calibrated AI pricing models can increase revenue by 5%–8% and boost customer satisfaction by 15%–20%. Transparency is key: offering calculators or clear examples that translate technical metrics into business value helps customers understand exactly what they’re paying for. When customers trust that your pricing reflects genuine value, they’re more likely to stick around.

Getting Stakeholder Feedback on Pricing Development

Even the most carefully crafted pricing model can overlook ethical concerns. That's why it's crucial to gather input from a wide range of stakeholders - customers, regulators, ethicists, and internal teams - before rolling out your AI pricing strategy. Research shows that 75% of consumers would stop using a company's products if they discovered its AI systems treated certain groups unfairly. By involving diverse perspectives early, you can identify potential issues before they erode trust or attract regulatory scrutiny. Below, we’ll explore practical ways to gather feedback from key groups like customers, regulators, and internal teams.

Engaging Customers

Customers are the ultimate judges of whether pricing feels fair and transparent. To understand their reactions, use tools like usage metrics and customer interviews to dig into the reasons behind their feedback. One effective method is "follow-me-home" interviews, where you observe customers using your product in their natural environment. For example, Intuit used this approach to study how freelancers interacted with its accounting software. These insights led to the creation of QuickBooks Self-Employed, addressing pain points that traditional pricing models had overlooked.

Another useful tool is the 5 Whys technique, which helps uncover the root cause of customer frustrations with pricing. Often, their dissatisfaction stems from a lack of transparency or perceived value. Before launching a new pricing model across the board, test it on a small segment or with new customers. This allows you to evaluate the financial and ethical implications in a controlled environment.

Customer insights are invaluable, but combining them with external expert reviews can further refine your pricing model.

Consulting Regulators and Ethicists

Involving external experts ensures your pricing algorithms meet ethical and regulatory standards. As Philip Chong, Global Digital Controls Leader at Deloitte, explains:

"Data scientists are technologists, not ethicists, therefore it is unrealistic for organizations to expect them to apply an ethical lens to their development activities."

To address this gap, consider setting up independent audits to validate your pricing algorithms. Ensure your models comply with current regulations, such as the EU AI Act and FTC guidelines.

A notable example comes from Salesforce, which used its Office of Ethical and Humane Use of Technology to review AI applications within its Einstein AI platform. Regular bias audits, guided by standardized fairness metrics and an AI ethics advisory board, helped the company boost transparency and prevent discriminatory outcomes. This proactive approach increased customer trust and adoption of their AI pricing tools. Publishing documents like Data Protection Impact Assessments (DPIAs) and Equality Impact Assessments (EIAs) can further demonstrate your commitment to ethical pricing practices.

Collaborating With Internal Teams

Internal collaboration is a critical component of an ethical and effective pricing model. By working across departments, you can align your pricing strategy with both business goals and stakeholder expectations. Sales and Customer Success teams are well-positioned to flag areas where pricing creates friction or disproportionately affects certain customer groups. Finance teams can analyze pricing scenarios to ensure profitability isn’t achieved at the expense of fairness or regulatory compliance. Meanwhile, Data and Engineering teams can identify and mitigate biases in historical datasets and implement safeguards - like rate limits - to prevent sudden cost spikes.

Assigning a Senior Responsible Owner (SRO) to oversee both the ethical and business aspects of your pricing strategy ensures accountability. Additionally, make pricing a regular discussion point for cross-functional teams, including Product, Sales, Finance, Marketing, and Customer Success, to maintain a comprehensive view of its impact.

Using Financial Tools for Ethical AI Pricing

Creating an ethical AI pricing model requires more than just good intentions - it calls for real-time financial insights. The right tools can help you monitor usage, ensure compliance, and communicate pricing decisions clearly to all stakeholders. With global AI spending expected to hit $1.5 trillion by 2025, startups need systems that can adapt to both rapid growth and evolving ethical expectations.

Real-Time Financial Insights: The Key to Sustainable Pricing

To build a sustainable pricing model, you need to understand your true costs per service unit. This includes everything from processing and inference to storage. Financial platforms that track granular usage data - like tokens processed, API calls, or computing hours - help tie pricing directly to service costs. Without this level of detail, companies risk losing margins as they grow, instead of strengthening them.

For instance, Lucid Financials integrates with Slack to deliver instant, AI-driven financial insights. Founders can track unit costs, spending trends, and runway in real time. This allows businesses to quickly identify when pricing becomes unsustainable, whether it's because rates are too low to cover infrastructure expenses or too high to match customer value. These insights make it easier to adjust pricing before small issues turn into big problems.

Another essential step is setting usage guardrails. Automated alerts or soft caps can notify you and your customers when usage spikes unexpectedly. For example, if a customer's API calls suddenly triple, an alert can warn them before they receive a shockingly high invoice. This not only protects your business from unpredictable costs but also builds trust with customers by keeping them informed. Accurate, real-time metrics are the backbone of compliance and transparency.
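The tripling example above can be expressed as a simple spike check against a customer's recent average. This is a sketch under assumed values: the multiplier of 3 comes from the example in the text, while the function name and sample history are hypothetical.

```python
from statistics import mean

SPIKE_MULTIPLIER = 3.0  # alert when usage reaches three times the recent average


def is_spike(recent_daily_calls: list, today_calls: int,
             multiplier: float = SPIKE_MULTIPLIER) -> bool:
    """Return True when today's usage is far above the recent baseline."""
    if not recent_daily_calls:
        return False  # no baseline yet, so there is nothing to compare against
    return today_calls >= multiplier * mean(recent_daily_calls)


history = [1000, 1100, 900]  # made-up API calls on previous days

for today in (1500, 3200):
    status = "send usage alert" if is_spike(history, today) else "normal"
    print(today, status)
```

A production version would likely use a longer window and account for weekly seasonality, but even this simple rule catches the runaway cases that lead to surprise invoices.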

Ensuring Compliance and Accuracy

Ethical pricing isn’t just about fairness - it also has to meet legal and regulatory requirements. Financial platforms can connect with Internal Control over Financial Reporting (ICFR) frameworks to ensure data completeness and accuracy. Features like data lineage allow you to track the origin and transformation of data, making your pricing logic fully auditable.

Maintaining clear audit trails is equally important. These trails document who accessed pricing data and the evidence behind pricing decisions. For example, the UK's Algorithmic Transparency Recording Standard (ATRS) requires organizations to disclose how and why they use algorithms. To address AI-specific risks - like model bias or infrastructure issues - beyond what standard SOC 1 reports cover, companies should consider providing additional documentation on model training and validation.

Platforms like Lucid Financials simplify compliance by consolidating tax, revenue, and financial data into one system. Their AI monitors transactions for discrepancies, while finance professionals review outputs to ensure they align with accounting standards like ASC 606. This mix of automation and human oversight minimizes the risk of compliance failures that could undermine your ethical pricing model.

Clear Reporting for Stakeholders

Once compliance and accuracy are in place, the next step is communicating clearly with stakeholders. Transparent reporting links usage metrics directly to business value, fostering trust. Automated tools eliminate the need for manual report compilation, instead generating board-ready financials and investor-grade forecasts with a single click.

For AI pricing, it’s crucial to provide stakeholders with clear explanations of how pricing metrics connect to value. Use calculators and examples to show customers exactly what they'll pay based on their usage. For investors, demonstrate how your pricing model balances revenue growth with ethical principles. For instance, you might highlight constraints that prevent discriminatory outcomes or excessive cost spikes.

Lucid Financials makes this process seamless by automating report generation and keeping data updated in real time. Whether you're answering investor questions, preparing for a board meeting, or reviewing pricing strategies with your team, the platform ensures you always have current, actionable data. Its Slack integration even lets you pull specific metrics or create custom reports on demand, without waiting for month-end closes. This instant access to financial data helps you stay accountable to your ethical pricing goals while remaining flexible enough to adapt to changing market conditions.

Conclusion

Creating ethical AI pricing models isn't just about compliance - it's about building trust and ensuring long-term success. Research highlights that 75% of consumers would stop using a product if they found its AI treated certain groups unfairly. On top of that, ethical pricing practices can significantly impact growth, potentially increasing revenue by 12–40%.

To make this happen, focus on the essentials: assemble diverse teams to identify and address biases, verify the accuracy of your data, test thoroughly for any disparities, and ensure customers have a clear understanding of how pricing decisions are made. Regular third-party audits and maintaining pricing guardrails are equally important steps to keep your system accountable.

Ethics and compliance are inseparable. Startups that routinely audit their algorithms are better positioned to catch discriminatory patterns before they turn into claims. With regulations like the EU AI Act and growing oversight from agencies like the FTC, taking a proactive approach to ethical AI design isn’t just a good idea - it’s a competitive edge. Businesses that prioritize these practices strengthen customer relationships, safeguard their reputation, and set themselves up for sustainable growth.

The path forward is clear: define responsible AI principles from the very beginning, involving senior leadership in the process. Use tools like model cards to document your system’s functionality and intended use, and encourage employees to raise ethical concerns by linking these efforts to performance evaluations. Real-time financial tools, such as Lucid Financials, add a layer of transparency and continuous compliance to your strategy. By integrating these steps, companies can meet regulatory expectations while fostering customer trust and driving fair, transparent growth.

FAQs

How can companies create AI pricing models that are fair and unbiased?

To develop AI pricing models that are fair and impartial, companies need to tackle potential biases in the training data head-on. Historical data often carries traces of past inequalities, making it essential to identify and address these problems early in the process.

Incorporating fairness and transparency as guiding principles can lead to more ethical and trustworthy outcomes. This means conducting regular audits to assess the model's performance, bringing in diverse voices during the design phase, and being upfront about how pricing decisions are made. These steps not only foster trust with customers but also help ensure pricing practices are more equitable.

How can I make AI pricing models more transparent and ethical?

To ensure AI pricing models are more transparent and ethical, focus on clear policies and making processes easier to understand. Start by creating specific rules and guidelines that outline how your AI systems are built and used. These policies should aim to promote fairness, follow ethical practices, and address any possible biases in the data.

Making the decision-making process of your AI models easier to explain is equally important. Break down how pricing decisions are reached in a way that stakeholders can follow, helping to build trust and accountability. Conduct regular audits of your AI systems to spot and fix any biases or patterns that might lead to unfair outcomes. By combining these efforts, you can develop pricing models that are transparent and ethically sound.

How can businesses ensure their AI pricing models comply with data privacy regulations?

To meet data privacy regulations, businesses should embrace a Privacy by Design framework. This means integrating privacy measures right from the start of system development. Key practices include anonymizing and encrypting user data, applying strict access controls, and following applicable laws such as the GDPR and CCPA. Keeping privacy policies up to date and being transparent about how data is used are also essential steps in building user trust.
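Two of these practices, keeping raw identifiers out of the pricing pipeline and passing along only the fields a model needs, can be sketched with the standard library. The field names, key, and record below are hypothetical. Note that keyed hashing is pseudonymization rather than full anonymization, so the output should still be treated as personal data.

```python
import hashlib
import hmac

# Fields the pricing model is allowed to see (an assumed allow-list).
ALLOWED_FIELDS = {"customer_ref", "plan", "monthly_api_calls"}


def pseudonymize(customer_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash that is stable per key."""
    return hmac.new(secret_key, customer_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, allowed_fields: set) -> dict:
    """Drop every field that is not explicitly allow-listed."""
    return {k: v for k, v in record.items() if k in allowed_fields}


key = b"example-key-load-from-a-secret-store"  # placeholder, never hard-code real keys
raw = {
    "customer_id": "cust-123",
    "email": "person@example.com",
    "plan": "starter",
    "monthly_api_calls": 1200,
}

safe = minimize({**raw, "customer_ref": pseudonymize(raw["customer_id"], key)}, ALLOWED_FIELDS)
print(sorted(safe))
```

An allow-list is used instead of a block-list so that any new field added upstream stays out of the pricing model until someone deliberately approves it.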

On top of that, businesses need to tackle ethical concerns head-on. Reducing bias in training data and ensuring algorithms operate fairly are critical. Regular privacy impact assessments and audits can uncover potential risks. These efforts not only help maintain compliance but also ensure AI pricing models operate within ethical and legal boundaries.