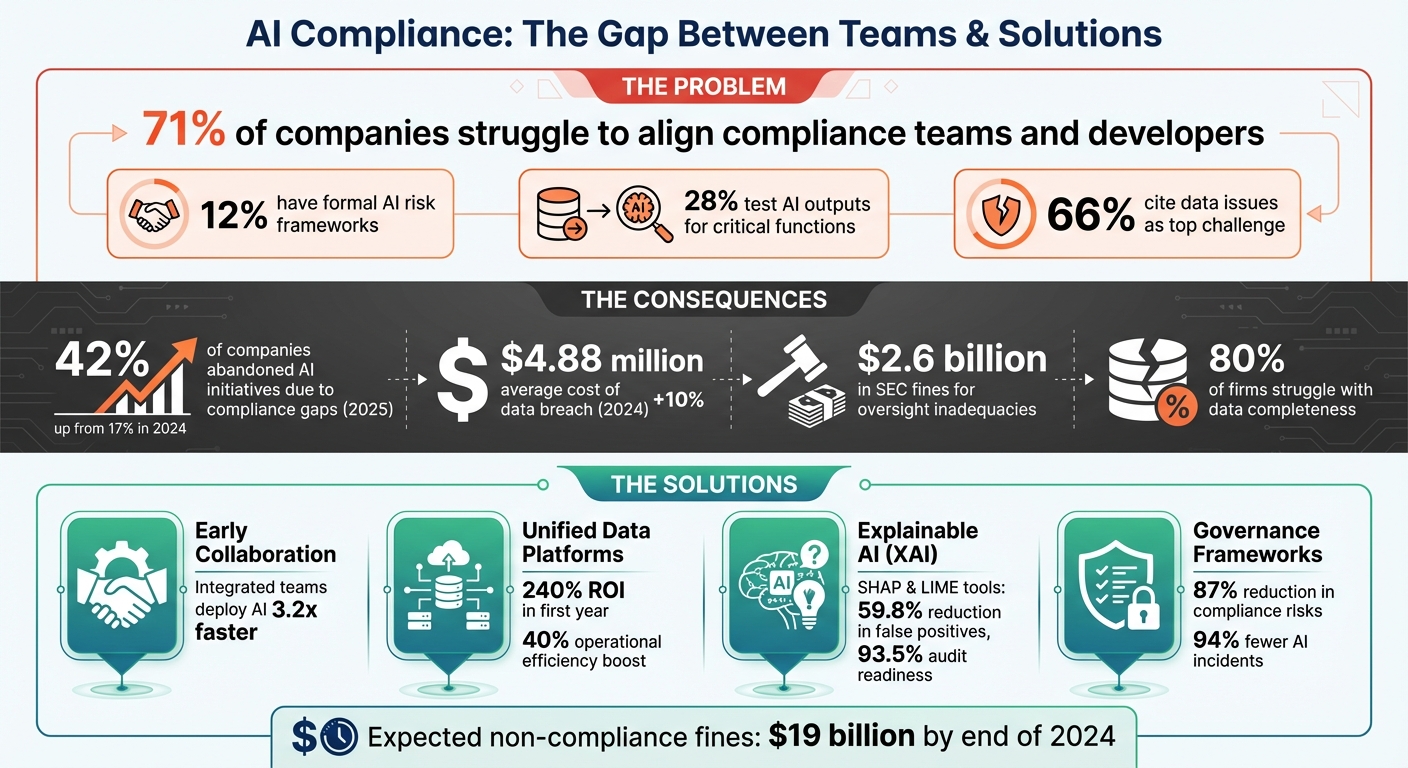

71% of companies using AI struggle to align their compliance teams and developers. Why? Compliance teams think in terms of regulatory principles such as transparency, while developers need concrete, actionable steps to meet those standards. This disconnect, called the "abstract-to-concrete gap", creates risks such as biased AI, regulatory penalties, and operational failures.

Key findings include:

- Only 12% of firms have formal AI risk frameworks, leaving many vulnerable.

- Just 28% test the AI outputs behind critical functions such as fraud detection.

- Disconnected systems and poor data quality are major barriers, with 66% of compliance and risk leaders citing data quality or access as their top challenge.

The solution? Early collaboration, shared vocabularies, unified data platforms, and explainable AI (XAI) tools like SHAP and LIME. These strategies help translate regulations into code, streamline audits, and reduce risks. Firms that invest in integrated governance frameworks and training programs can align teams, cut compliance costs, and build trust in AI systems.

AI Compliance Challenges: Key Statistics and Solutions for Bridging Teams

The Knowledge Gap Between Compliance and Development Teams

Why the Knowledge Gap Exists

Connecting compliance and development teams is essential to balancing regulatory requirements with the need for innovation. Compliance teams focus on adhering to legal standards, while developers aim to achieve technical excellence. This difference in priorities makes it challenging to turn abstract regulatory concepts - like transparency or explainability - into actionable code.

Traditional compliance frameworks were built for predictable, deterministic systems, and they fit poorly with the dynamic, probabilistic nature of modern AI models. Instead of returning the same output for the same input every time, AI systems produce probabilistic outputs and are retrained regularly to improve. Yaniv Sayers, Chief Architect at OpenText, highlights this challenge:

"Traditional compliance frameworks often lag behind AI innovation, and governance models built for deterministic systems struggle with probabilistic outputs and autonomous decision-making."

Data issues deepen the divide. A full 66% of compliance and risk leaders identify poor data quality or access as their top obstacle to implementing AI, 54% cite a lack of expertise, and only 42% of compliance professionals express confidence in AI-generated outputs. Developers often have little visibility into training data that comes from external components, and that opacity creates significant hurdles for compliance teams trying to audit AI systems.

When these teams operate in silos, the result is often operational inefficiency and increased risk.

What Happens When Teams Don't Align

When compliance and development teams fail to collaborate, the consequences go beyond technical problems to operational instability. In February 2026, for example, a financial services company suffered weekly outages caused by AI-generated code. Poor data quality and unclear accountability led to integration errors and oversight failures.

One of the core issues is the difference in how these teams approach oversight. Compliance teams tend to rely on static, point-in-time evaluations, while AI systems evolve continuously between releases. This disconnect can result in regulatory blind spots. For instance, developers may deploy streamlined models with fewer safety measures to enhance performance, leaving high-stakes systems without adequate oversight.

Verifying AI compliance is also slow and costly: auditing a single AI system typically takes 1–2.5 days and costs $3,000–$7,500. Mohan Krishna, Head of Product Innovation at konaAI, sums up the situation:

"Compliance teams see AI as inevitable and necessary, but they are constrained by governance risk, data quality, regulatory uncertainty, and skills gap."

Without alignment, these gaps can lead to systemic vulnerabilities that are difficult - and expensive - to address.

Data Silos Block Effective Collaboration

Fragmented data systems are a major roadblock to bridging the gap between compliance and development teams.

Problems with Disconnected Data Systems

When compliance and development teams operate in isolated systems, essential information gets trapped in silos. The consequences are serious: 80% of firms report struggling with data completeness, a basic requirement for audit readiness. Routine audit questions such as “Which models processed customer data this month?” or “Who accessed sensitive information at each stage?” become difficult to answer.
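When every team writes to one shared log, the two audit questions above become simple queries. The sketch below assumes a hypothetical unified audit log; the `AuditEvent` fields, the stage and category labels, and the sample events are illustrative and not taken from any particular product.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AuditEvent:
    """One entry in a hypothetical unified audit log shared by compliance and engineering."""
    timestamp: datetime
    actor: str          # person or service account
    model_id: str       # model or pipeline involved
    stage: str          # e.g. "ingest", "train", "inference"
    data_category: str  # e.g. "customer_pii", "market_data"


def models_touching(events, category, start, end):
    """Which models processed data of `category` in the window [start, end)?"""
    return sorted({e.model_id for e in events
                   if e.data_category == category and start <= e.timestamp < end})


def access_by_stage(events, category):
    """Who accessed data of `category`, grouped by pipeline stage?"""
    by_stage = {}
    for e in events:
        if e.data_category == category:
            by_stage.setdefault(e.stage, set()).add(e.actor)
    return {stage: sorted(actors) for stage, actors in by_stage.items()}


events = [
    AuditEvent(datetime(2026, 3, 2), "etl-svc", "fraud-v3", "ingest", "customer_pii"),
    AuditEvent(datetime(2026, 3, 5), "alice", "fraud-v3", "train", "customer_pii"),
    AuditEvent(datetime(2026, 3, 9), "scoring-svc", "credit-v1", "inference", "customer_pii"),
    AuditEvent(datetime(2026, 2, 20), "bob", "churn-v2", "train", "customer_pii"),
    AuditEvent(datetime(2026, 3, 11), "etl-svc", "pricing-v4", "ingest", "market_data"),
]

march = (datetime(2026, 3, 1), datetime(2026, 4, 1))
print(models_touching(events, "customer_pii", *march))  # ['credit-v1', 'fraud-v3']
print(access_by_stage(events, "customer_pii"))
# {'ingest': ['etl-svc'], 'train': ['alice', 'bob'], 'inference': ['scoring-svc']}
```

The value lies in the shared schema: once both teams agree on fields like `data_category` and `stage`, nobody has to reconstruct answers by hand from separate tools.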

The financial exposure is real. In 2024, the average cost of a data breach reached $4.88 million, a 10% increase over the previous year. In the U.S., the SEC has imposed $2.6 billion in fines on firms for failures in communications management and oversight. These figures show what fragmented systems can cost.

Disconnected systems also push companies into inefficient, expensive workarounds. Governance often has to be retrofitted onto existing pipelines, which is error-prone and costly. Engineering teams without legal expertise are left to interpret privacy policies and translate them into code, while compliance teams are stuck with manual reviews, jumping between tools such as GitHub and MLflow to gather evidence and enforce governance.

The Ethyca Team captures the challenge perfectly:

"60% of leaders cite legacy infrastructure and compliance complexity as top barriers to scaling AI. But beneath that stat is a deeper issue. The processes designed to manage risk are now generating it."

Clearly, overcoming these inefficiencies requires breaking down data silos and centralizing systems.

Why Unified Data Platforms Matter

Unified data platforms offer a solution to the issues caused by disconnected systems. By consolidating tools for surveillance, archiving, and AI governance into a single platform, organizations can create a single source of truth. This shift not only lowers costs but also improves audit readiness. Companies transitioning from manual processes to automated governance have reported an average ROI of over 240% in the first year.

Consolidation also enables end-to-end traceability: metadata and lineage tracking document exactly how data flows from source systems into AI models. That visibility is crucial for regulatory requirements such as "N-1" look-back capabilities, which let an organization verify which rules were active at any given point in time. Automated log reviews add further gains, improving operational performance by roughly 40% compared with manual methods and freeing compliance teams to focus on strategic oversight instead of administrative work.
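The look-back requirement comes down to a lookup: given a timestamp, return the rule version that was in force at that moment. A minimal sketch, assuming each rule is stored as an append-only list of versions with effective-from timestamps; `VersionedRule`, `publish`, and `active_at` are hypothetical names, and the threshold values are made up.

```python
import bisect
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class VersionedRule:
    """Hypothetical append-only history of one compliance rule."""
    rule_id: str
    effective_from: list = field(default_factory=list)  # sorted datetimes
    versions: list = field(default_factory=list)        # rule bodies, parallel to effective_from

    def publish(self, when, body):
        """Record a new version; history is append-only and strictly chronological."""
        if self.effective_from and when <= self.effective_from[-1]:
            raise ValueError("versions must be published in chronological order")
        self.effective_from.append(when)
        self.versions.append(body)

    def active_at(self, when):
        """Return the version in force at `when`, or None if the rule did not exist yet."""
        i = bisect.bisect_right(self.effective_from, when) - 1
        return self.versions[i] if i >= 0 else None


rule = VersionedRule("large-transaction-alert")
rule.publish(datetime(2025, 1, 1), {"threshold_usd": 10_000})
rule.publish(datetime(2025, 7, 1), {"threshold_usd": 5_000})

print(rule.active_at(datetime(2025, 3, 15)))   # {'threshold_usd': 10000}
print(rule.active_at(datetime(2025, 7, 1)))    # {'threshold_usd': 5000}
print(rule.active_at(datetime(2024, 12, 31)))  # None
```

Because versions are never overwritten, an auditor asking why a transaction did not trigger an alert in March can be shown the rule that applied then, not the one in force today.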

The move toward unified systems mirrors a larger industry trend. Over the past three years, 70% of corporate compliance professionals have shifted from "checkbox compliance" to proactive, strategic approaches. ShieldFC emphasizes this shift:

"Data fragmentation is no longer tolerable. Regulators expect unified, defensible frameworks, making unification, responsible AI, and complete data governance non-negotiable."

For organizations aiming to scale AI responsibly while staying compliant, unified data platforms are no longer optional - they’re essential.

Making AI Models Transparent for Regulators

Once data is unified, the next hurdle is making sure regulators can see how AI decisions are made. Model transparency builds on that unified foundation: where unified data resolves fragmented information, transparent models connect regulatory requirements to technical execution, strengthening both compliance and trust.

The 'Black Box' Problem Explained

The "black box" problem describes AI systems where the process of turning inputs into outputs is hidden from both users and developers. This lack of visibility becomes especially risky in critical fields like credit lending, parole assessment, and Medicaid eligibility, where opaque decision-making can lead to bias and errors.

Regulators are starting to address this issue. The EU AI Act, whose first obligations (including its prohibitions) began applying on February 2, 2025, requires transparency and human oversight for high-risk AI systems, with most high-risk obligations phasing in later. Violations of its outright prohibitions can draw fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher. Meanwhile, traditional model risk guidance such as SR 11-7 was not designed for evolving, autonomous systems, leaving many organizations struggling to adapt. As RayCor Consulting puts it:

"If you can't explain it, you can't defend it. If you can't audit it, you can't trust it. And if you can't control it, it doesn't belong in your compliance program."

The stakes are high. In 2025, 42% of companies abandoned AI initiatives due to compliance gaps, a sharp jump from 17% in 2024. Legal safeguards like the "right to an explanation" are becoming essential, ensuring that individuals affected by AI decisions can understand the reasoning behind them.
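For the EU AI Act's most serious violations (its prohibited AI practices), the fine ceiling is the higher of a fixed amount and a share of turnover, so for large firms the percentage dominates. A small worked sketch of that arithmetic, assuming the €35 million / 7% cap for prohibited practices and the rule that SMEs and start-ups face whichever amount is lower; `max_fine_eur` is an illustrative helper, and other violation categories carry lower caps.

```python
def max_fine_eur(worldwide_turnover_eur, is_sme=False):
    """Ceiling on an EU AI Act fine for prohibited practices (illustrative).

    Cap = EUR 35 million or 7% of total worldwide annual turnover,
    whichever is higher; for SMEs and start-ups, whichever is lower.
    """
    fixed_cap = 35_000_000
    turnover_cap = 0.07 * worldwide_turnover_eur
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


for turnover in (100_000_000, 2_000_000_000):
    print(f"turnover EUR {turnover:,}: cap EUR {max_fine_eur(turnover):,.0f}")
# turnover EUR 100,000,000: cap EUR 35,000,000
# turnover EUR 2,000,000,000: cap EUR 140,000,000
```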

Using Explainable AI (XAI)

Explainable AI (XAI) offers a way forward by producing clear, auditable explanations for AI decisions. Tools like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) let compliance teams trace a decision, such as a risk score, back to specific inputs like transaction types or customer history. This helps resolve the "compliance paradox", in which organizations must balance analytical power against regulatory clarity.
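SHAP is built on Shapley values from cooperative game theory: each feature's attribution is its average marginal contribution to the score across all orderings of the features. The sketch below computes exact Shapley values from scratch (it does not use the SHAP library) for a hypothetical three-feature risk score, treating a feature as "absent" by setting it to a baseline value. Enumerating every coalition is exponential in the number of features, which is why production tools rely on approximations or model-specific shortcuts.

```python
from itertools import combinations
from math import factorial


def shapley_attributions(f, x, baseline):
    """Exact Shapley value of each feature of `x` for model `f`, relative to `baseline`.

    Features outside a coalition take their baseline value. Cost grows as 2^n
    model calls per feature, so this is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def risk_score(z):
    """Hypothetical fraud risk score: amount, foreign-transaction flag, prior alerts."""
    amount, is_foreign, prior_alerts = z
    return 0.002 * amount + 20 * is_foreign + 15 * prior_alerts + 10 * is_foreign * prior_alerts


x = [5000, 1, 2]          # transaction being explained
baseline = [1000, 0, 0]   # "typical" reference transaction
phi = shapley_attributions(risk_score, x, baseline)
print([round(p, 6) for p in phi])                      # [8.0, 30.0, 40.0]
print(round(risk_score(x) - risk_score(baseline), 6))  # 78.0
```

The attributions always sum to the gap between the explained score and the baseline score (80 minus 2 here), which lets a reviewer reconcile an explanation against the model's actual output. The interaction between the foreign flag and prior alerts is split evenly between those two features.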

In September 2025, Naga Srinivasulu Gaddapuri introduced a Cognitive Regulatory Engine that combined XAI with Graph Neural Networks. Tested on 15 million historical transactions from Tier-1 banks, the system reduced false positives by 59.8% and achieved a 93.5% audit readiness rating. It also cut manual documentation efforts by 68% by offering regulator-friendly explanations.

Building explainability into models from the start is critical, as retrofitting later can cost 2–3 times more. Organizations should prioritize inherently interpretable models, like linear regression or decision trees, for high-stakes applications. More complex models can then serve as "challengers" to refine features and improve performance. This hybrid strategy ensures every decision is traceable, balancing transparency with analytical effectiveness. By embedding XAI early, companies not only clarify how decisions are made but also foster stronger collaboration between compliance and development teams.
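An inherently interpretable model can make every decision traceable by construction. A minimal sketch of a points-based linear scorecard that returns reason codes (the features that lowered the score most) with each decision; the feature names, weights, base score, and cutoff are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical scorecard: each feature adds weight * value points to a base score,
# so every decision decomposes exactly into per-feature contributions.
BASE_SCORE = 600.0
WEIGHTS = {
    "missed_payments": -40.0,
    "utilization_pct": -0.8,
    "years_of_history": 5.0,
}


@dataclass
class Decision:
    score: float
    approved: bool
    contributions: dict   # feature -> points added (negative = pulled the score down)
    reason_codes: list    # most damaging features first


def score_applicant(applicant, cutoff=550.0, max_reasons=2):
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    score = BASE_SCORE + sum(contributions.values())
    # Rank negative contributions from most to least damaging.
    adverse = sorted((pts, name) for name, pts in contributions.items() if pts < 0)
    reasons = [name for _, name in adverse[:max_reasons]]
    return Decision(score, score >= cutoff, contributions, reasons)


d = score_applicant({"missed_payments": 2, "utilization_pct": 70, "years_of_history": 3})
print(round(d.score, 2), d.approved, d.reason_codes)
# 479.0 False ['missed_payments', 'utilization_pct']
```

A more complex challenger model can be trained on the same data and compared against this champion on held-out cases; features the challenger finds useful can then be considered for the scorecard without giving up per-decision traceability.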

How to Build Cross-Functional Collaboration

Transparent AI models alone can’t drive success without strong teamwork across departments. While unified data and clear models tackle technical challenges, effective collaboration ensures these tools meet regulatory demands. According to a 2024 Workday report, only 62% of business leaders and 55% of employees feel confident their organizations will use AI responsibly. This gap in trust often arises from misaligned goals between compliance and development teams.

Forming Integrated Teams

The traditional approach, in which developers build models and then hand them off for compliance review, is outdated. Companies need integrated teams in which compliance officers, AI developers, and data scientists work together from the start. Microsoft, for example, has established an Office of Responsible AI, led by a Chief Responsible AI Officer and supported by a Responsible AI Council co-chaired by the company's President and its CTO. An internal portal ensures every AI project goes through mandatory assessments and approvals. Similarly, Workday created a dedicated Responsible AI team in 2025 that reports directly to the Chief Legal Officer, backed by an executive advisory board that meets monthly to align AI efforts with ethical standards and global regulations.

Tools like RACI charts and the "Three Lines of Defense" model can help define roles and responsibilities across the AI lifecycle. Instead of compliance acting as a last-minute gatekeeper, it becomes a strategic partner throughout the process. This integrated setup not only improves collaboration but also sets the stage for effective training and shared understanding, which is explored further below.
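A RACI chart is easy to check mechanically once it lives in a shared, versioned file rather than a slide. The sketch below assumes a hypothetical matrix keyed by lifecycle stage and applies the usual RACI conventions (exactly one Accountable and at least one Responsible per stage), plus an illustrative rule that compliance must appear at every stage so it acts as a partner throughout rather than a final gatekeeper.

```python
# Hypothetical RACI matrix: lifecycle stage -> {role: R/A/C/I}.
RACI = {
    "data_sourcing":    {"data_science": "R", "compliance": "C", "cro": "A", "legal": "I"},
    "model_validation": {"model_risk": "R", "compliance": "C", "cro": "A"},
    "deployment":       {"ml_engineering": "R", "compliance": "A", "data_science": "C"},
    "monitoring":       {"ml_engineering": "R", "model_risk": "R"},  # no Accountable, no compliance
}


def raci_problems(matrix, required_role="compliance"):
    """Return human-readable violations of basic RACI conventions."""
    problems = []
    for stage, assignments in matrix.items():
        letters = list(assignments.values())
        bad = sorted(set(letters) - set("RACI"))
        if bad:
            problems.append(f"{stage}: unknown codes {bad}")
        if letters.count("A") != 1:
            problems.append(f"{stage}: needs exactly one Accountable, found {letters.count('A')}")
        if "R" not in letters:
            problems.append(f"{stage}: no Responsible role")
        if required_role not in assignments:
            problems.append(f"{stage}: {required_role} not involved")
    return problems


for p in raci_problems(RACI):
    print(p)
# monitoring: needs exactly one Accountable, found 0
# monitoring: compliance not involved
```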

Training Programs for Both Teams

Creating a mutual understanding between compliance and development teams is key to long-term success. Compliance professionals need basic machine learning literacy, while developers need a working grasp of regulatory requirements. This dual-focus training directly targets the abstract-to-concrete gap described earlier. Demand is growing quickly: by early 2026, more than 50% of compliance officers were using or experimenting with AI, up from just 30% in 2023.

Training programs should be tailored to specific roles and focus on practical applications. Executives can concentrate on strategy and risk management, developers on techniques like fairness and privacy, and legal teams on AI governance and regulations. Scenario-based exercises - such as bias simulations or mock audits - prepare teams for real-world challenges. Many organizations are also creating shared AI glossaries to ensure consistent understanding of terms like "high-risk." As Lumenova AI highlights:

"The real risks aren't always embedded in the models themselves, but in the disconnects between departments".

Centralized training materials, stored alongside risk assessments and model cards, offer a single "source of truth" for all stakeholders. This approach transforms compliance from a simple checklist into a shared responsibility.
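Shared AI glossaries work best when the same definitions drive both policy documents and code. A minimal sketch, assuming a hypothetical glossary that maps use-case tags to risk tiers loosely modeled on the EU AI Act's four-tier structure; the tags and mappings are illustrative, and unknown tags are rejected so they get routed to compliance instead of silently defaulting to a low tier.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = 0   # lower value = stricter tier
    HIGH = 1
    LIMITED = 2
    MINIMAL = 3


# Hypothetical shared glossary, owned jointly by compliance and engineering.
GLOSSARY = {
    "social_scoring": RiskTier.PROHIBITED,
    "credit_scoring": RiskTier.HIGH,
    "recruitment_screening": RiskTier.HIGH,
    "benefits_eligibility": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def classify(use_case_tags):
    """Return the strictest tier among a system's use-case tags."""
    if not use_case_tags:
        raise ValueError("a system needs at least one use-case tag")
    unknown = [t for t in use_case_tags if t not in GLOSSARY]
    if unknown:
        raise ValueError(f"not in shared glossary, route to compliance review: {unknown}")
    return min((GLOSSARY[t] for t in use_case_tags), key=lambda tier: tier.value)


print(classify(["customer_chatbot", "credit_scoring"]))  # RiskTier.HIGH
```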

Creating AI Governance Frameworks

Training and integration lay the groundwork for AI compliance, but structured governance is what turns good intentions into enforceable practice. A formal governance framework does more than assign responsibilities; it aligns technical and compliance teams under one strategy. Yet 73% of organizations lack a comprehensive AI governance framework, leaving them exposed to fines and reputational damage. By contrast, companies with mature frameworks deploy AI systems 3.2 times faster and cut compliance risk by 87%.

What Goes Into AI Governance

A robust AI governance framework typically revolves around four key areas:

- Model Risk Management: For high-stakes applications like credit approvals, algorithmic trading, or anti-money laundering, AI models must undergo rigorous validation.

- Data Governance: Ensuring the integrity and lineage of training data is critical. Automated tools can scan for statistical biases, particularly those tied to protected characteristics, before they lead to problems.

- Record-Keeping: Rules such as MiFID II and SEC Rule 17a-4 require firms to preserve records in tamper-resistant form, which in practice means AI-driven decisions need logs that trace each one back to its origin.

- Human Oversight: High-risk scenarios demand "circuit-breakers" that let human operators intervene or override AI decisions when necessary.
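The automated bias scan under Data Governance can start as simply as comparing outcome rates across groups. This sketch applies the "four-fifths rule", a screening heuristic from U.S. employment-selection guidance that flags any group whose favorable-outcome rate falls below 80% of the highest group's rate. It is one possible check rather than a complete fairness test, and the group labels and counts are made up.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, favorable: bool). Returns the favorable rate per group."""
    totals, favorable = defaultdict(int), defaultdict(int)
    for group, ok in outcomes:
        totals[group] += 1
        favorable[group] += int(ok)
    return {g: favorable[g] / totals[g] for g in totals}


def four_fifths_flags(outcomes, threshold=0.8):
    """Return {group: impact_ratio} for groups below `threshold` x the best group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        return {}  # no favorable outcomes at all; ratios are undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical approvals: group A approved 50/100, group B 30/100, group C 45/100.
outcomes = ([("A", i < 50) for i in range(100)]
            + [("B", i < 30) for i in range(100)]
            + [("C", i < 45) for i in range(100)])
print({g: round(r, 2) for g, r in four_fifths_flags(outcomes).items()})  # {'B': 0.6}
```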

Formal governance also strengthens accountability by standardizing documentation and assigning clear oversight roles. For instance, tools like Model Cards and explainability frameworks such as SHAP or LIME can help clarify how decisions are made. A RACI matrix ensures accountability, while appointing a Chief AI Officer provides centralized oversight. In sectors like financial services, it’s essential to remember that AI systems must meet the same regulatory standards as human-led processes, covering areas like suitability, fairness, and marketing conduct.
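The Record-Keeping item above calls for logs that trace each AI-driven decision back to its origin and resist tampering. One common building block is a hash chain: each entry stores a SHA-256 digest that covers the previous entry's digest, so changing any earlier record breaks verification from that point on. A minimal sketch; the record fields are hypothetical, and a chain like this is tamper-evident rather than tamper-proof, so it would still be paired with write-once storage or an external anchor.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash, record):
    """SHA-256 over the previous hash plus a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("ascii") + payload).hexdigest()


class DecisionLog:
    """Append-only, hash-chained log of AI decisions (illustrative)."""

    def __init__(self):
        self.entries = []  # list of (record, hash) pairs

    def append(self, record):
        prev = self.entries[-1][1] if self.entries else GENESIS
        h = entry_hash(prev, record)
        self.entries.append((dict(record), h))
        return h

    def verify(self):
        """Recompute the chain; False if any record or hash was altered."""
        prev = GENESIS
        for record, h in self.entries:
            if entry_hash(prev, record) != h:
                return False
            prev = h
        return True


log = DecisionLog()
log.append({"decision_id": "d-001", "model": "credit-v1", "score": 479, "outcome": "declined"})
log.append({"decision_id": "d-002", "model": "credit-v1", "score": 642, "outcome": "approved"})
print(log.verify())                 # True
log.entries[0][0]["score"] = 700    # simulate someone editing an old record
print(log.verify())                 # False
```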

Once these foundational elements are in place, the challenge becomes keeping the framework aligned with ever-changing regulations.

Maintaining Compliance Over Time

A strong governance framework isn’t static; it must evolve as regulations shift. The EU AI Act, for example, applies to any company whose AI systems affect individuals in the EU, no matter where the company is based, and the European Commission has made clear that regulatory expectations don’t pause. In the U.S., OMB Memorandum M-25-21 (issued April 2025), which governs federal agencies' use of AI, focuses on reducing unnecessary barriers while maintaining safeguards.

To keep pace, dynamic governance relies on three critical strategies:

- Continuous Monitoring: Real-time tracking of model performance, data drift, and emerging biases can reduce AI-related incidents by 94% and cut resolution times from 6.7 days to just 2.3 hours.

- Automated Regulatory Mapping: Compliance tools with pre-built templates for the EU AI Act and for standards such as ISO/IEC 42001 and the NIST AI RMF help organizations track regulatory changes with less manual effort.

- Lifecycle Gates: Embedding governance checkpoints at every stage of the AI lifecycle - from ideation to deployment - can boost project success rates from 54% to 89%.
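The Continuous Monitoring strategy above often starts with a drift statistic computed on a schedule. This sketch uses the Population Stability Index (PSI), a common choice in credit risk, to compare a feature's recent distribution against its training-time baseline. The bin edges and data are hypothetical, and the 0.1 / 0.25 alert levels are a widely used rule of thumb rather than a regulatory threshold.

```python
import bisect
import math


def bin_proportions(values, edges):
    """Share of `values` in each bin defined by sorted `edges` (len(edges) + 1 bins)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, eps=1e-6):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_status(value):
    # Rule-of-thumb bands; each team should calibrate its own.
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "monitor"
    return "investigate"


edges = [25, 50, 75]
baseline = bin_proportions(list(range(100)), edges)            # 25% in each bin
recent = bin_proportions([v + 20 for v in range(100)], edges)  # population shifted upward
score = psi(baseline, recent)
print(round(score, 3), drift_status(score))  # 0.439 investigate
```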

Conclusion

Blending compliance with development isn’t just about meeting regulations - it’s about fostering trust in AI systems. Forward-thinking organizations are moving away from the outdated "build first, consult legal later" mindset. Instead, they’re embracing collaboration from the start. When compliance teams and developers align on shared goals, use unified data platforms, and follow clear governance structures, what might seem like friction becomes a powerful advantage.

For example, companies that digitize their compliance policies have reported more than 240% ROI in their first year. Ignoring these practices, by contrast, is expensive: non-compliance fines were projected to reach $19 billion by the end of 2024.

"Effective compliance isn't about checking boxes when auditors arrive - it's about building audit-ready processes from day one." - Jeff Ward, Partner, Aprio

FAQs

What should an AI risk framework include?

An AI risk framework needs to tackle major risks while ensuring adherence to regulations such as the EU AI Act and GDPR. It should incorporate a risk taxonomy, well-structured policies, and governance controls. Strong frameworks also include tools for risk identification, monitoring, and transparency, paired with human oversight and lifecycle management. These components help align advancements in AI with ethical and legal standards, building systems that people can trust.

How can we prove an AI model is explainable to regulators?

Give regulators clear, traceable explanations of how the model reaches its decisions. In practice, that means documenting the logic behind its outputs, producing per-decision explanations (for example, SHAP or LIME feature attributions) that link each outcome to specific inputs, and recording the origins and lineage of the data used to train it. Keeping this evidence verifiable makes the system's reasoning easy to follow and supports compliance.

What’s the fastest way to break down AI data silos?

The fastest way to break down AI data silos is to pair cross-team collaboration with consolidation. When legal, compliance, and technical teams work together from a unified data platform with shared metadata and lineage tracking, AI systems become easier to manage and audit. This keeps departments aligned, supports regulatory compliance, and improves overall operational efficiency.